Recently a few people have tagged me about Samsung’s 200MP sensor, which is claimed to pack a full well capacity (FWC) of 10000 e- into a 0.6 µm pixel. This means that Samsung’s FWC per unit area is 10 times higher than that of an ordinary camera sensor. For example, a 6 µm pixel from a 24MP full frame sensor has an FWC of less than 100K e-. To put it another way, taking ISO100 as the native ISO of such a camera sensor, the native ISO of Samsung’s sensor is ISO10, which sounds crazy. Given that a full frame sensor has about 12 times the area of Samsung’s smartphone sensor, the total FWC of the two sensors is basically in the same ballpark.

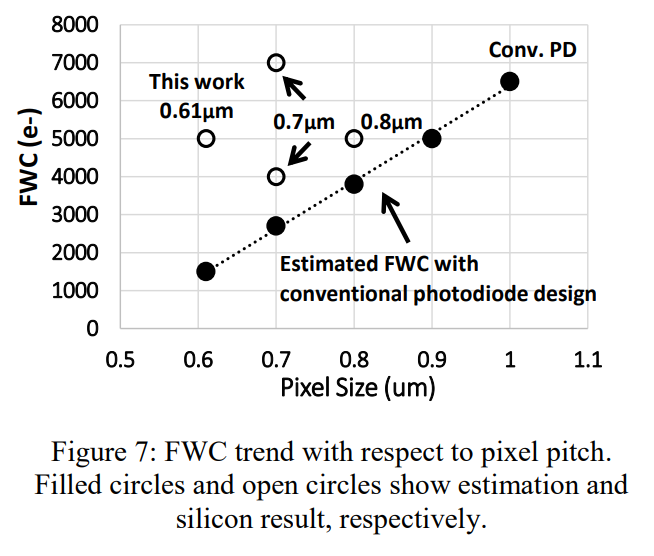
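The arithmetic behind those claims is easy to check. Here is a minimal sketch using only the numbers quoted above; the base ISO of 100 for the full frame reference sensor, and the idea that base ISO scales inversely with FWC density, are my simplifying assumptions for illustration.

```python
def fwc_density(fwc_electrons: float, pixel_pitch_um: float) -> float:
    """Full well capacity per unit pixel area, in e-/um^2 (square pixel assumed)."""
    return fwc_electrons / pixel_pitch_um ** 2

samsung_density = fwc_density(10_000, 0.6)      # 200MP smartphone sensor
full_frame_density = fwc_density(100_000, 6.0)  # 24MP full frame, upper bound

density_ratio = samsung_density / full_frame_density

# Assumption: the reference sensor has base ISO 100, and base ISO
# scales inversely with FWC density.
implied_base_iso = 100 / density_ratio

# Total charge the whole sensor can hold: pixel count x per-pixel FWC.
samsung_total = 200e6 * 10_000
full_frame_total = 24e6 * 100_000

print(f"FWC density ratio: {density_ratio:.1f}x")
print(f"implied base ISO: {implied_base_iso:.0f}")
print(f"total FWC, full frame / Samsung: {full_frame_total / samsung_total:.1f}x")
```

The two totals differ by a small factor only, which is what "same ballpark" means here.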

I have to admit I was a bit cocky at first and didn’t take it seriously. How is it even possible? People were so excited about Nikon’s ISO64 and now you’re telling me smartphone sensors can already reach ISO10? Plus it’s Samsung, the king of cheating. So you can understand why I was skeptical about it. But later I read several papers from Samsung/Sony/OV and realised that this is nothing new. Here is a graph from OV’s paper which shows that smartphone sensors with tiny pixels generally have much higher FWC density than older sensors.

So they improved the process technology somehow but I’m not going to pretend that I know semiconductor physics very well. I do need a new phone anyway, so I bought an S23 Ultra, which is equipped with this magic sensor.

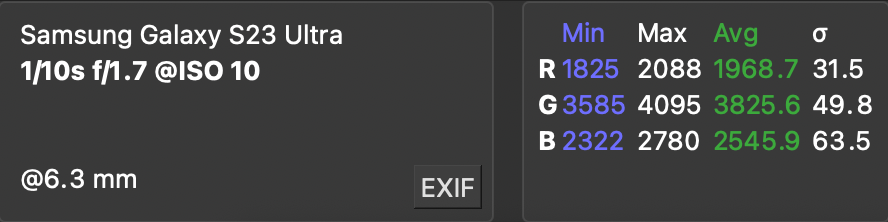

The camera does provide ISO10, but it’s only available in the auto mode. In manual mode the lowest you can set is ISO50. This makes me wonder if it’s just “extended ISO”, and it definitely makes the testing a bit trickier. But anyway, here are two RAW readings from a Samsung S23U and my A7C:

As you can see, the Samsung used a much longer exposure time and a slightly larger aperture. All in all its exposure is 11.2x larger than the A7C’s, basically making up for the sensor size difference. In the green channel it uses 3825/4096 = 93% of its FWC (the bit depth is only 12 bit). Take the 11.2x exposure difference into consideration and at the A7C’s exposure it would actually use 93/11.2 = 8.3%, whereas the A7C uses 9115/15860 = 57% of its FWC.

To put it more simply, it’s basically ISO14 vs ISO100. The FWC density is 7 times higher on the Samsung sensor!
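For anyone who wants to redo the numbers, here is a rough sketch of that normalisation. It treats the RAW full scale (4096 and 15860) as a stand-in for FWC, as the text does, and takes the A7C shot to be at ISO100; the variable names are mine.

```python
def utilisation(raw_value: float, full_scale: float) -> float:
    """Fraction of the full scale (used as a proxy for FWC) that is filled."""
    return raw_value / full_scale

s23u_util = utilisation(3825, 4096)   # green channel, 12-bit RAW
a7c_util = utilisation(9115, 15860)   # green channel, A7C full scale from the text

exposure_ratio = 11.2                 # S23U exposure / A7C exposure

# What the S23U would fill if it received the same exposure as the A7C.
s23u_util_at_a7c_exposure = s23u_util / exposure_ratio

# How many times more light per unit area the S23U needs to fill up.
density_ratio = a7c_util / s23u_util_at_a7c_exposure

# Assumption: the A7C reading was taken at ISO100.
equivalent_iso = 100 / density_ratio

print(f"S23U utilisation: {s23u_util:.1%}")
print(f"S23U at A7C exposure: {s23u_util_at_a7c_exposure:.1%}")
print(f"A7C utilisation: {a7c_util:.1%}")
print(f"FWC density ratio: {density_ratio:.1f}x")
print(f"equivalent ISO: {equivalent_iso:.1f}")
```

The result lands close to the ISO14 and 7x figures above.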

WOW! Not quite 10 fold but still very impressive. The SNR here is 9115/134 = 68 for the A7C vs 3825/50 = 76.5 for the Samsung. So Samsung actually yields higher SNR in this case! However, I have to say my test setup is far from ideal. The test subject is not a light box but a computer screen, so you have issues like flickering or Moiré affecting the SNR value. So don’t take it too seriously. But it’s obvious that Samsung is not using tricks like exposure bracketing, otherwise the SNR would be significantly lower. What you see here is the proof of real high FWC.
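The SNR figures are just the mean green level divided by the noise reading. A small sketch, assuming the 134 and 50 are standard deviations measured over the same flat patch (the dB conversion is an extra I added for convenience):

```python
import math

def snr(mean_signal: float, noise_std: float) -> float:
    """Signal-to-noise ratio of a flat patch: mean level over standard deviation."""
    return mean_signal / noise_std

def snr_db(mean_signal: float, noise_std: float) -> float:
    """The same ratio expressed in decibels (20 * log10 for amplitude ratios)."""
    return 20 * math.log10(snr(mean_signal, noise_std))

a7c_snr = snr(9115, 134)
s23u_snr = snr(3825, 50)

print(f"A7C:  {a7c_snr:.1f} ({snr_db(9115, 134):.1f} dB)")
print(f"S23U: {s23u_snr:.1f} ({snr_db(3825, 50):.1f} dB)")
```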

I also did another test to see if the ISO10 setting is extended or not but I think you already know the answer. It’s not. So why did they limit it in the manual mode? I have no logical explanation.

This means that when the light is good, a smartphone’s SNR is actually quite comparable to a full frame sensor.

Damn… didn’t expect that. We have to face the reality that smartphone sensors are way more advanced than camera sensors now. Sony cameras desperately need this kind of deep FWC sensor, because with their stupid 1/8000 turtle shutter speed you can’t even use F1.4 in daylight.
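To put a number on the F1.4 complaint, here is a back-of-the-envelope sketch based on the Sunny 16 rule of thumb (in direct sunlight, at f/16 the exposure time is roughly 1/ISO seconds). The rule is an approximation I am bringing in for illustration, not a measurement.

```python
def sunny16_exposure_time(f_number: float, iso: float) -> float:
    """Approximate exposure time in seconds for a sunlit scene.

    Sunny 16 rule of thumb: at f/16 the time is 1/ISO; light gathered
    scales with 1/N^2, so the time scales with (N/16)^2.
    """
    return (f_number / 16.0) ** 2 / iso

fastest_shutter = 1 / 8000  # shutter limit mentioned in the text

t_iso100 = sunny16_exposure_time(1.4, 100)
t_iso10 = sunny16_exposure_time(1.4, 10)

for iso, t in ((100, t_iso100), (10, t_iso10)):
    verdict = "fine" if t >= fastest_shutter else "overexposed at 1/8000"
    print(f"ISO{iso}, F1.4: needs about 1/{1 / t:.0f} s ({verdict})")
```

Under this approximation ISO100 at F1.4 needs a shutter faster than 1/8000, while a native ISO10 would leave plenty of headroom.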

On the other hand, this doesn’t really change my opinion about smartphone cameras. I said this years ago: smartphones can easily achieve high dynamic range with fast exposure bracketing, even with moving subjects (as long as they’re not too fast… ). Using a high FWC sensor instead of exposure bracketing is interesting from a technical point of view, but it’s still not as good as exposure bracketing.

There are also barriers that can’t be broken through so easily, like low-light performance and telephoto performance. Here is a comparison between RX100m7 @ 200mm and S23U @ 230mm (10x zoom):

You know which is which… you can’t beat the physical limitations of the lens itself. However, it does help me read the menu at a McDonald’s, and that’s all I’m asking for from a smartphone.

And interestingly Samsung’s software is still not there. You want RAW output? You need to go to the PRO mode first, and you lose all the computational photography magic, like fake bokeh/exposure bracketing/night mode. Samsung now has an Expert RAW mode, as if the PRO mode were not expert enough. However, after some testing I’ve realized that it can only output bracketed RAW. No fake bokeh, no night mode. Why do they have to make a new mode that requires you to dive into the menu instead of just making a “HDR RAW” switch in the PRO mode? I have no idea. Anyway, if you want something more professional from a smartphone, the experience totally sucks. And interestingly the bracketed RAW seems to be fake. Many people have claimed that it’s just a JPG image in a DNG container and you can’t recover any shadow details. I didn’t even bother to try, given how limited the Expert RAW mode is anyway. Definitely not going to use it.

I see some other users claiming that GCAM is way better than the stock camera app. I gave it a try and couldn’t disagree more.

It’s funny that after so many years of development, it seems to me that the most useful computational photography tricks are still coming from cameras, like Olympus’s live bulb mode or emulated ND. Sony’s apps were also very good, but sadly they are gone for good now.